
How does it work?

The AI application detects different parts of the user’s face, such as the eyes, eyebrows, nose and mouth. It then interprets their shapes to determine which facial expression the person is most likely showing at that moment.
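To make the idea concrete, here is a minimal sketch of how shape measurements could be turned into a "most likely" expression. It is not the project's actual model: the feature names (`mouth_curve`, `mouth_open`, `brow_raise`, `eye_open`), the prototype values and the function names are all hypothetical, and a real system would typically use a trained classifier on detected face landmarks instead.

```python
import math

# Hypothetical prototype shapes, one per expression (illustrative values only).
# Assumed features:
#   mouth_curve: +1 corners up, -1 corners down
#   mouth_open:  0 closed, 1 wide open
#   brow_raise:  +1 raised, -1 lowered
#   eye_open:    0 closed, 1 wide open
PROTOTYPES = {
    "happy":     {"mouth_curve": 0.8,  "mouth_open": 0.3, "brow_raise": 0.1,  "eye_open": 0.5},
    "sad":       {"mouth_curve": -0.7, "mouth_open": 0.0, "brow_raise": -0.2, "eye_open": 0.4},
    "surprised": {"mouth_curve": 0.0,  "mouth_open": 0.9, "brow_raise": 0.9,  "eye_open": 1.0},
    "angry":     {"mouth_curve": -0.4, "mouth_open": 0.1, "brow_raise": -0.9, "eye_open": 0.6},
    "neutral":   {"mouth_curve": 0.0,  "mouth_open": 0.1, "brow_raise": 0.0,  "eye_open": 0.5},
}


def expression_probabilities(features):
    """Return a probability per expression for the measured face shapes."""
    # Score each expression by how close the measured shapes are to its prototype.
    scores = {}
    for name, proto in PROTOTYPES.items():
        dist2 = sum((features[key] - proto[key]) ** 2 for key in proto)
        scores[name] = -dist2

    # Softmax turns the scores into probabilities that sum to 1.
    top = max(scores.values())
    exps = {name: math.exp(score - top) for name, score in scores.items()}
    total = sum(exps.values())
    return {name: value / total for name, value in exps.items()}


def most_likely_expression(features):
    """Pick the expression with the highest probability."""
    probs = expression_probabilities(features)
    return max(probs, key=probs.get)
```

For example, a face with raised eyebrows, wide eyes and an open mouth sits closest to the `"surprised"` prototype, so that expression gets the highest probability.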

The purpose of this project was to help users, primarily teens, gain insights into and reflect on themselves and their everyday lives.

We wanted to enable this by exploring a new way of interacting in games, one that builds on how facial expressions connect to something most teens use on a daily basis: emojis.

Play in pairs for the best experience.

Try to mimic the emotions displayed on screen to collect points together!

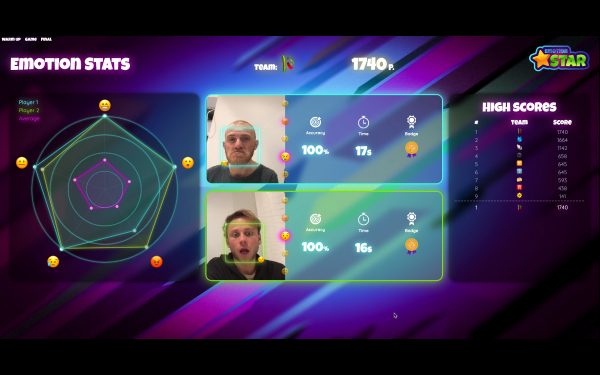

After the game, look at your personal performance and learn which emotions you are an emotion star at and which emotions you struggle with.
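One simple way to produce such a summary is to compute a hit rate per emotion for each player. The sketch below assumes the game logs each attempt as a `(target_emotion, matched)` pair; that log format and the function names are hypothetical and not taken from the project.

```python
from collections import defaultdict


def emotion_hit_rates(attempts):
    """Return the share of successful mimics per target emotion.

    attempts: list of (target_emotion, matched) tuples for one player
    (an assumed log format).
    """
    totals = defaultdict(int)
    hits = defaultdict(int)
    for target, matched in attempts:
        totals[target] += 1
        if matched:
            hits[target] += 1
    return {emotion: hits[emotion] / totals[emotion] for emotion in totals}


def strongest_and_weakest(attempts):
    """Return (best_emotion, worst_emotion), or (None, None) without data."""
    rates = emotion_hit_rates(attempts)
    if not rates:
        return None, None
    return max(rates, key=rates.get), min(rates, key=rates.get)
```

A player who nails every "happy" face but never matches "angry" would get `"happy"` as their strongest emotion and `"angry"` as the one to practise.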

In the first step of the game you get to practise your facial expressions, but be aware: once you have completed them all, the game starts and every expression counts!

Now you have roughly 1 minute to bring home those sweet points. Collect them all to beat the high scores :O

View your team's and your individual performance. Did you get a medal? We sure hope so. Just mimic the faces again to filter the data and you will find out.